How AI Fraudsters Tried to Become Me — and What It Means for Every Investor

A few months ago, I received a polite email from an investor asking about a “Zoom meeting I had invited him to.”

Except I hadn’t invited him.

He forwarded the email, and for a moment, I thought it was mine — same logo, same tone, even the same sign-off:

Warm regards, Taimour Zaman, Founder, AltFunds Global

The meeting link was fake. The email domain was one character off. The sender wasn’t me — it was an AI-generated impersonation.

That was the day I realized: AI wasn’t just changing the world — it was pretending to be me.

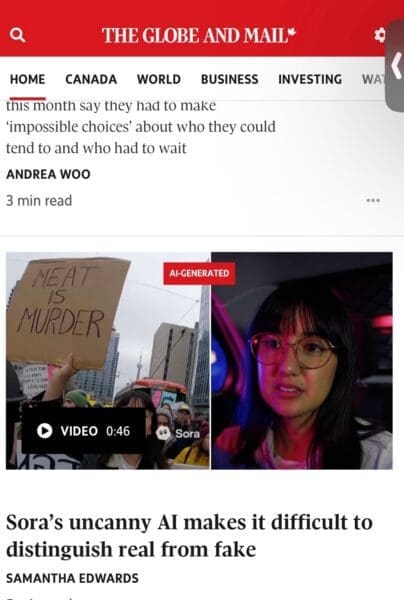

Soon after, The Globe and Mail ran a story that perfectly captured what I was living:

“Sora’s uncanny AI makes it difficult to distinguish real from fake.”

The journalist, Samantha Edwards, wasn’t exaggerating. She was documenting the new reality — a world where text prompts can fabricate videos, voices, and even people.

What she didn’t know was that fraudsters were already using these same tools to create deepfake versions of me — complete with synthetic contracts, fake compliance letters, and AI-generated Zoom calls.

They weren’t hacking systems.

They were hijacking identity.

For centuries, humans trusted what they could see. In 2025, that trust has collapsed.

AI tools like Sora, ChatGPT, and voice-cloning engines can now generate “proof” that feels real: documents, voices, even video statements.

The danger?

It’s not science fiction anymore — it’s scalable deception.

Fraudsters can make a fake “CEO” ask for payment, fabricate “proof of funds” letters, or issue fraudulent “partnership offers” that look indistinguishable from the real thing.

And because the details are so convincing — logos, language, structure — victims fall fast.

At AltFunds Global, we realized we couldn’t rely on old-world verification in a new-world crisis.

So we built new defenses:

These aren’t just policies. They’re survival tools for trust.

Switzerland’s FINMA, along with FATF and Europol, is studying the intersection of AI and financial crime — because synthetic identities aren’t just a threat to brands like ours. They’re a threat to the stability of capital markets.

A fake video of a CEO “announcing a funding deal” can move millions.

A fake compliance letter can open international accounts.

That’s why the guiding principle at AltFunds Global (AFG) remains:

Technology can accelerate truth — but it should never manufacture it.

Fraud thrives in silence. Truth survives in sunlight.

AI didn’t create dishonesty — it just made it faster.

The real fight is for authenticity in a world that rewards illusion.

So yes, I’ve been impersonated. My voice, my words, my digital identity — stolen and repackaged by algorithms.

But what no machine can clone is character.

And that’s what ultimately wins — in finance, in reputation, in life.

Taimour Zaman is the Founder of AltFunds Global AFG AG, based in Zug, Switzerland. His firm structures alternative capital solutions for accredited investors, with a focus on ethical finance, regulatory integrity, and digital trust.
